
class sklearn.feature_selection.RFECV(estimator, step=1, cv=None, scoring=None, verbose=0, n_jobs=1)

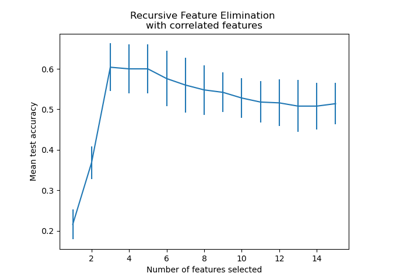

Feature ranking with recursive feature elimination and cross-validated selection of the best number of features.

Read more in the User Guide.

Parameters: estimator : object

A supervised learning estimator with a fit method that updates a coef_ attribute that holds the fitted parameters. Important features must correspond to high absolute values in the coef_ array. For instance, this is the case for most supervised learning algorithms such as Support Vector Classifiers and Generalized Linear Models from the svm and linear_model modules.

step : int or float, optional (default=1)

If greater than or equal to 1, then step corresponds to the (integer) number of features to remove at each iteration. If within (0.0, 1.0), then step corresponds to the fraction of the original number of features to remove at each iteration (rounded down, with at least one feature removed).

cv : int, cross-validation generator or an iterable, optional

Determines the cross-validation splitting strategy. Possible inputs for cv are:

- None, to use the default 3-fold cross-validation,

- integer, to specify the number of folds.

- An object to be used as a cross-validation generator.

- An iterable yielding train/test splits.

For integer/None inputs, if the estimator is a classifier and y is either binary or multiclass, sklearn.model_selection.StratifiedKFold is used. In all other cases, sklearn.model_selection.KFold is used.

Refer to the User Guide for the various cross-validation strategies that can be used here.

scoring : string, callable or None, optional, default: None

A string (see model evaluation documentation) or a scorer callable object / function with signature scorer(estimator, X, y).

verbose : int, default=0

Controls verbosity of output.

n_jobs : int, default 1

Number of cores to run in parallel while fitting across folds. Defaults to 1 core. If n_jobs=-1, the number of jobs is set to the number of cores.

Attributes: n_features_ : int

The number of features selected with cross-validation.

support_ : array of shape [n_features]

The mask of selected features.

ranking_ : array of shape [n_features]

The feature ranking, such that ranking_[i] corresponds to the ranking position of the i-th feature. Selected (i.e., estimated best) features are assigned rank 1.

grid_scores_ : array of shape [n_subsets_of_features]

The cross-validation scores such that grid_scores_[i] corresponds to the CV score of the i-th subset of features.

estimator_ : object

The external estimator fit on the reduced dataset.
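A minimal sketch of the parameters and attributes above, assuming a recent scikit-learn release. The data come from make_classification, and LogisticRegression stands in as a linear classifier exposing coef_; both are illustrative choices, not part of this page. sklearn.model_selection.check_cv is used only to show which splitter an integer cv resolves to. The sketch leaves out grid_scores_, which newer scikit-learn releases removed in favor of cv_results_.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFECV
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, StratifiedKFold, check_cv

# Synthetic binary classification problem (illustrative data only).
X, y = make_classification(n_samples=200, n_features=12, n_informative=4,
                           n_redundant=0, random_state=0)

# An integer cv with a classifier and binary/multiclass y resolves to
# StratifiedKFold; in all other cases it resolves to KFold.
assert isinstance(check_cv(5, y, classifier=True), StratifiedKFold)
assert isinstance(check_cv(5, y, classifier=False), KFold)

# step=0.25 removes int(0.25 * 12) = 3 features per iteration.
selector = RFECV(LogisticRegression(max_iter=1000), step=0.25, cv=5,
                 scoring="accuracy")
selector.fit(X, y)

print("n_features_:", selector.n_features_)
print("ranking_:", selector.ranking_)

# Features with rank 1 are exactly the ones kept in support_.
assert np.array_equal(selector.support_, selector.ranking_ == 1)
assert selector.support_.sum() == selector.n_features_
```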

Notes

The size of grid_scores_ is equal to ceil((n_features - 1) / step) + 1, where step is the number of features removed at each iteration.

References

[R168] Guyon, I., Weston, J., Barnhill, S., & Vapnik, V., "Gene selection for cancer classification using support vector machines", Mach. Learn., 46(1-3), 389-422, 2002.

Examples
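The size formula given in the Notes can be checked by hand. The sketch below is a simplified model, not scikit-learn's code: it assumes an integer step and that elimination continues until a single feature is left, lists the feature counts visited, and compares their number with ceil((n_features - 1) / step) + 1. The helper names subset_sizes and grid_size are hypothetical.

```python
import math


def subset_sizes(n_features, step):
    """Feature counts visited when removing `step` features per iteration
    until a single feature remains (simplified model, integer step)."""
    sizes = [n_features]
    remaining = n_features
    while remaining > 1:
        # Never remove so many features that none would be left.
        remaining -= min(step, remaining - 1)
        sizes.append(remaining)
    return sizes


def grid_size(n_features, step):
    """Number of evaluated subsets according to the Notes formula."""
    return math.ceil((n_features - 1) / step) + 1


for n_features, step in [(10, 1), (10, 3), (10, 4)]:
    sizes = subset_sizes(n_features, step)
    assert len(sizes) == grid_size(n_features, step)
    print(n_features, step, sizes)
# (10, 4) visits [10, 6, 2, 1], i.e. ceil(9 / 4) + 1 = 4 subsets.
```

The last iteration removes fewer than step features whenever step does not divide n_features - 1, which is why the formula uses a ceiling.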

The following example shows how to retrieve the 5 informative features of the Friedman #1 dataset, which are not known a priori.

>>> from sklearn.datasets import make_friedman1
>>> from sklearn.feature_selection import RFECV
>>> from sklearn.svm import SVR
>>> X, y = make_friedman1(n_samples=50, n_features=10, random_state=0)
>>> estimator = SVR(kernel="linear")
>>> selector = RFECV(estimator, step=1, cv=5)
>>> selector = selector.fit(X, y)
>>> selector.support_
array([ True, True, True, True, True, False, False, False, False, False], dtype=bool)
>>> selector.ranking_
array([1, 1, 1, 1, 1, 6, 4, 3, 2, 5])

Methods

- decision_function(*args, **kwargs)

- fit(X, y): Fit the RFE model and automatically tune the number of selected features.

- fit_transform(X[, y]): Fit to data, then transform it.

- get_params([deep]): Get parameters for this estimator.

- get_support([indices]): Get a mask, or integer index, of the features selected.

- inverse_transform(X): Reverse the transformation operation.

- predict(*args, **kwargs): Reduce X to the selected features and then predict using the underlying estimator.

- predict_log_proba(*args, **kwargs)

- predict_proba(*args, **kwargs)

- score(*args, **kwargs): Reduce X to the selected features and then return the score of the underlying estimator.

- set_params(**params): Set the parameters of this estimator.

- transform(X): Reduce X to the selected features.

__init__(estimator, step=1, cv=None, scoring=None, verbose=0, n_jobs=1)


fit(X, y)

Fit the RFE model and automatically tune the number of selected features.

Parameters: X : {array-like, sparse matrix}, shape = [n_samples, n_features]

Training vector, where n_samples is the number of samples and n_features is the total number of features.

y : array-like, shape = [n_samples]

Target values (integers for classification, real numbers for regression).
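A short sketch of fit on sparse input with real-valued regression targets, as the parameter description above allows. It assumes scipy is installed; Ridge and the make_friedman1 data are illustrative choices, not part of this page. fit returns the fitted selector itself, so calls can be chained.

```python
from scipy import sparse
from sklearn.datasets import make_friedman1
from sklearn.feature_selection import RFECV
from sklearn.linear_model import Ridge

# Dense regression data converted to a CSR sparse matrix.
X, y = make_friedman1(n_samples=100, n_features=8, random_state=0)
X_sparse = sparse.csr_matrix(X)

# Ridge is a regressor, so an integer cv resolves to KFold here.
selector = RFECV(Ridge(alpha=1.0), step=1, cv=4)
fitted = selector.fit(X_sparse, y)  # fit returns the selector itself

print("selected:", selector.n_features_, selector.support_)
```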


fit_transform(X, y=None, **fit_params)

Fit to data, then transform it.

Fits transformer to X and y with optional parameters fit_params and returns a transformed version of X.

Parameters: X : numpy array of shape [n_samples, n_features]

Training set.

y : numpy array of shape [n_samples]

Target values.

Returns: X_new : numpy array of shape [n_samples, n_features_new]

Transformed array.
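A minimal sketch, reusing the Friedman #1 setup from the example above, that checks fit_transform against indexing X with support_. Although the signature lists y=None, RFECV's fit requires targets, so y must be passed in practice.

```python
import numpy as np
from sklearn.datasets import make_friedman1
from sklearn.feature_selection import RFECV
from sklearn.svm import SVR

X, y = make_friedman1(n_samples=50, n_features=10, random_state=0)

selector = RFECV(SVR(kernel="linear"), step=1, cv=5)
X_new = selector.fit_transform(X, y)  # same as selector.fit(X, y).transform(X)

# The output keeps n_features_ columns, in their original order.
print(X.shape, "->", X_new.shape)
assert X_new.shape == (X.shape[0], selector.n_features_)
assert np.array_equal(X_new, X[:, selector.support_])
```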


get_params(deep=True)

Get parameters for this estimator.

Parameters: deep : boolean, optional

If True, will return the parameters for this estimator and contained subobjects that are estimators.

Returns: params : mapping of string to any

Parameter names mapped to their values.
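A quick illustration of deep versus shallow parameter listing, assuming a linear SVR as the wrapped estimator. Parameters of the wrapped estimator appear under the estimator__ prefix only when deep=True.

```python
from sklearn.feature_selection import RFECV
from sklearn.svm import SVR

selector = RFECV(SVR(kernel="linear"), step=2, cv=3)

shallow = selector.get_params(deep=False)  # RFECV's own constructor arguments
deep = selector.get_params(deep=True)      # also includes estimator__* entries

print(sorted(shallow))
print(deep["estimator__kernel"])
```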


get_support(indices=False)

Get a mask, or integer index, of the features selected.

Parameters: indices : boolean (default False)

If True, the return value will be an array of integers, rather than a boolean mask.

Returns: support : array

An index that selects the retained features from a feature vector. If indices is False, this is a boolean array of shape [# input features], in which an element is True if and only if its corresponding feature is selected for retention. If indices is True, this is an integer array of shape [# output features] whose values are indices into the input feature vector.
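A small sketch comparing the two return forms of get_support on the Friedman #1 setup from the example above: the integer index is simply the positions of the True entries in the boolean mask.

```python
import numpy as np
from sklearn.datasets import make_friedman1
from sklearn.feature_selection import RFECV
from sklearn.svm import SVR

X, y = make_friedman1(n_samples=50, n_features=10, random_state=0)
selector = RFECV(SVR(kernel="linear"), step=1, cv=5).fit(X, y)

mask = selector.get_support()                 # boolean, one entry per input feature
indices = selector.get_support(indices=True)  # integer positions of kept features

print(mask)
print(indices)
```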


inverse_transform(X)

Reverse the transformation operation.

Parameters: X : array of shape [n_samples, n_selected_features]

The input samples.

Returns: X_r : array of shape [n_samples, n_original_features]

X with columns of zeros inserted where features would have been removed by transform.
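A minimal round-trip sketch, again on the Friedman #1 setup: transform drops the unselected columns, and inverse_transform restores the original width with zeros in their place. The removed values themselves are not recovered.

```python
import numpy as np
from sklearn.datasets import make_friedman1
from sklearn.feature_selection import RFECV
from sklearn.svm import SVR

X, y = make_friedman1(n_samples=50, n_features=10, random_state=0)
selector = RFECV(SVR(kernel="linear"), step=1, cv=5).fit(X, y)

X_r = selector.transform(X)              # shape (50, n_features_)
X_back = selector.inverse_transform(X_r) # shape (50, 10), zeros where removed

kept = selector.support_
print(X_r.shape, X_back.shape)
```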


predict(*args, **kwargs)

Reduce X to the selected features and then predict using the underlying estimator.

Parameters: X : array of shape [n_samples, n_features]

The input samples.

Returns: y : array of shape [n_samples]

The predicted target values.
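A sketch of what predict amounts to: reducing X with transform and then calling predict on the fitted estimator_. The train/test split by slicing is an illustrative choice.

```python
import numpy as np
from sklearn.datasets import make_friedman1
from sklearn.feature_selection import RFECV
from sklearn.svm import SVR

X, y = make_friedman1(n_samples=100, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = X[:80], X[80:], y[:80], y[80:]

selector = RFECV(SVR(kernel="linear"), step=1, cv=5).fit(X_train, y_train)

y_pred = selector.predict(X_test)
# Same result obtained by hand from the reduced features.
manual = selector.estimator_.predict(selector.transform(X_test))
print(y_pred[:3])
```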


score(*args, **kwargs)

Reduce X to the selected features and then return the score of the underlying estimator.

Parameters: X : array of shape [n_samples, n_features]

The input samples.

y : array of shape [n_samples]

The target values.
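A short sketch showing that score delegates to the underlying estimator's own score method on the reduced features (R^2 for SVR), independently of the scoring parameter, which only drives the cross-validated choice of the number of features.

```python
import numpy as np
from sklearn.datasets import make_friedman1
from sklearn.feature_selection import RFECV
from sklearn.svm import SVR

X, y = make_friedman1(n_samples=100, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = X[:80], X[80:], y[:80], y[80:]

selector = RFECV(SVR(kernel="linear"), step=1, cv=5).fit(X_train, y_train)

test_score = selector.score(X_test, y_test)
# Equivalent: the estimator's score on the selected columns only.
manual = selector.estimator_.score(selector.transform(X_test), y_test)
print(round(test_score, 3))
```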


set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as pipelines). The latter have parameters of the form <component>__<parameter> so that it's possible to update each component of a nested object.

Returns: self
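A minimal sketch of updating a top-level parameter and a parameter of the wrapped estimator in one call, using the <component>__<parameter> form described above. The values step=2 and C=0.5 are arbitrary.

```python
from sklearn.feature_selection import RFECV
from sklearn.svm import SVR

selector = RFECV(SVR(kernel="linear"), step=1, cv=5)

# "step" is RFECV's own parameter; "estimator__C" reaches into the wrapped SVR.
returned = selector.set_params(step=2, estimator__C=0.5)

print(selector.step, selector.estimator.C)
```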


transform(X)

Reduce X to the selected features.

Parameters: X : array of shape [n_samples, n_features]

The input samples.

Returns: X_r : array of shape [n_samples, n_selected_features]

The input samples with only the selected features.
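A sketch of transform in a pipeline, where RFECV passes only the selected columns to the next step. The Ridge final step is an illustrative choice; make_pipeline names its steps after the lowercased class names ("rfecv", "ridge").

```python
from sklearn.datasets import make_friedman1
from sklearn.feature_selection import RFECV
from sklearn.linear_model import Ridge
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVR

X, y = make_friedman1(n_samples=100, n_features=10, random_state=0)

pipe = make_pipeline(RFECV(SVR(kernel="linear"), step=1, cv=5), Ridge(alpha=1.0))
pipe.fit(X, y)

selector = pipe.named_steps["rfecv"]
X_sel = selector.transform(X)  # only the selected columns
ridge = pipe.named_steps["ridge"]

print(X_sel.shape, ridge.coef_.shape)
```

The final Ridge model is trained on X_sel, so it has one coefficient per selected feature rather than per original feature.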
